A neural network for Alex

This was a first test for training neural network models.

My goal was to train a Model for stable diffusion based on 16 images of myself.

These were the 16 input images, cropped to a resolution of 512x512:

The training of the model took about 45 Minutes. I used a GPU (A5000) from paperspace, and a paperspace (jupyter) notebook for Dreambooth training from TheLastBen/PPS. The steps I used for training loosely follow what is described here.

Two key concepts apply:

- there are concepts, such as

man, where Stable Diffusion already has base knowledge through its initial training. - there are instances of concepts, such as

alex- a model can be trained on a specific instance (which is what I did using the 16 images above)

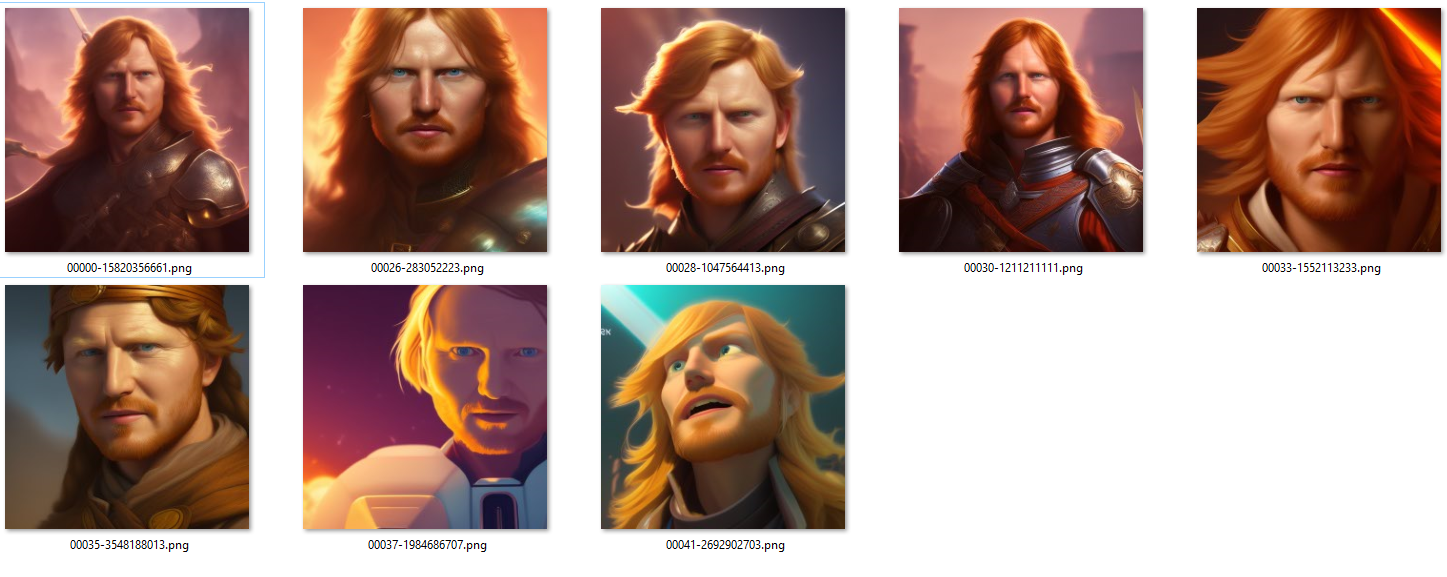

After the training, images can be generated using text prompts.

The first step is to test the model with the prompt photo of alex man

Afterwards, specific prompts can be used to generate other images.

Here is my prompt for (some) of the images above.

face photo of (alex man:1.2) as a knight, long hair, friendly, energetic, artstation, concept art, cinematic lighting, insanely detailed, octane, digital painting, smooth, sharp focus, illustration, vibrant colors, 3d render, photorealistic, hdr, 8k, exquisite, trending on artstation

Note:

alexis replaced with a specific instance token in the real prompt:1.2is a specific weight attached to a concept (in this case, the attention weight is increased)- similar to weights, parentheses

(...)can be used to highlight concept which should get particular attention

I also used the following negative prompt:

((bw)), ((black and white)), blurry, bad, worse, worst, cheap, sketch, basic, juvenile, unprofessional, failure, oil, signature, watermark, amateur, grotesque, misshapen, deformed, distorted, malformed, unsightly, terrible, awful, repellent, disgusting, revolting, loathsome, mangled, awkward, ugly, offensive, repulsive, ghastly, hideous, unappealing, frightful, odious, obnoxious, hateful, vile

The base for these prompts was shared by other authors and emerged from experience.

Afterwards, I just exchanged as a knight with other descriptions, such as as an old coal miner in 19th century or as a peasant, medieval times.

This was a test. The next step is to train specific Models for map making and cartography.

img2img

There are also other approaches to use Stable Diffusion. One test I made was using sattelite imagery and Stable Diffusion img2img generation. Here, both a prompt and an image are used to generate a new image.

Here’s a comparison, with the original satellite image on the left side, and a stable diffusion modified version on the right side.

prompt: aerial image, desert, dunes, dry, sand, rock

.. or, another one for cartoon style, medieval, village, landscape, map:

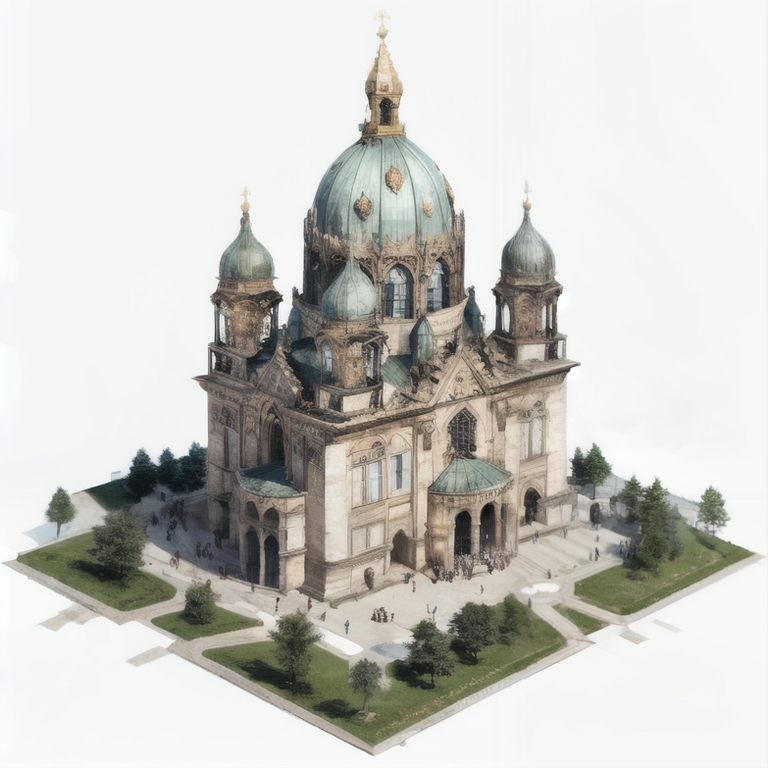

It is also possible to create individual map icons for landmarks. The Frauenkirche, for instance, appears to be known to the original Stable Diffusion 1.5 training data set. At least somewhat known:

While the results can be improved, such as through additional training, the technique has potential for generating background and foreground cartographic material for illustration purposes, for instance landscape or regional planning scenarios (in the wake of climate change).