Download all Features from all layers of a WFS Service

Download all Features from all layers of a WFS Service and save as separate shapefile per layer (e.g. #BfN #LSG)?

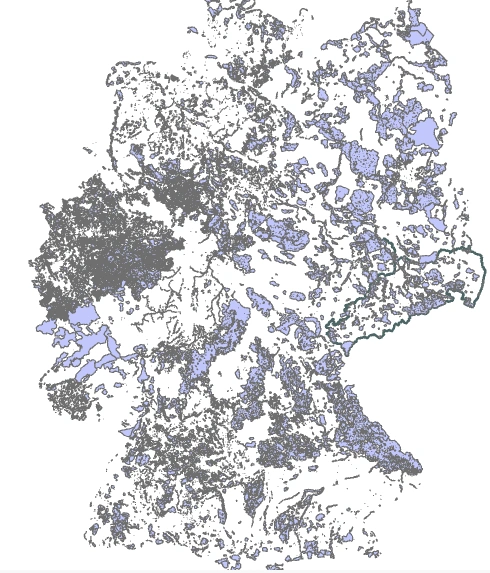

I wanted to get all #WFS features, but neither ArcPro nor QGIS worked here. Wrote a script with #geopandas and #OWSLib and tada, 5 Minutes later..

Opensource! 🚀 👍

These are the steps.

Example to retrieve all WFS Features and store as shapefile with pandas and geopandas.

“BfN Schutzgebiete WFS” https://geodienste.bfn.de/ogc/wfs/schutzgebiet?REQUEST%3DGetCapabilities&SERVICE%3DWFS&VERSION=2.0.0

Setup:

conda create -n wfs_env -c conda-forge

conda activate wfs_env

conda install owslib geopandas requests

First, check capabilities in browser or with:

python get_cap.py

Select your layer, check count.

Edit write_shp.py, update count=1000 and startIndex=ix*1000 - 1000 is an example, update with the maximum number of items to retrieve per iteration, based on the capabilities.

- optionally adapt the WFS format

format='GML'(you can also try removing this parameter for automatic matching) Then run:

python write_shp.py

This will loop through all WFS features:

> https://geodienste.bfn.de/ogc/wfs/schutzgebiet?service=WFS&version=2.0.0&request=GetFeature&typeName=bfn_sch_Schutzgebiet%3ALandschaftsschutzgebiete&count=1000&startIndex=0

> https://geodienste.bfn.de/ogc/wfs/schutzgebiet?service=WFS&version=2.0.0&request=GetFeature&typeName=bfn_sch_Schutzgebiet%3ALandschaftsschutzgebiete&count=1000&startIndex=1000

> https://geodienste.bfn.de/ogc/wfs/schutzgebiet?service=WFS&version=2.0.0&request=GetFeature&typeName=bfn_sch_Schutzgebiet%3ALandschaftsschutzgebiete&count=1000&startIndex=2000

> https://geodienste.bfn.de/ogc/wfs/schutzgebiet?service=WFS&version=2.0.0&request=GetFeature&typeName=bfn_sch_Schutzgebiet%3ALandschaftsschutzgebiete&count=1000&startIndex=3000

.. and concat df’s (pandas (Geo)DataFrames) and finally store features as shapefile. The CRS is not modified and should match the WFS layer’s projection.

Or run:

python write_shp_all.py

This will loop through all layers and retrieve all features and store them in separate shapefiles.

write_shp_all.py:

"""Fetch all features and all layers of a WFS service

and store as a single shapefile per layer.

"""

__version__ = '2024-01-07'

__author__ = 'Alexander Dunkel'

from requests import Request

from owslib.wfs import WebFeatureService

import geopandas as gp

import pandas as pd

# URL for WFS backend

url = "https://geodienste.bfn.de/ogc/wfs/schutzgebiet"

# Initialize

wfs = WebFeatureService(url=url)

layers = list(wfs.contents)

def fetch_features(layer_name, fetch_cnt: int, ix: int) -> gp.GeoDataFrame:

"""Fetch features and return as GeoDataFrame"""

params = dict(service='WFS', version="2.0.0", request='GetFeature',

typeName=layer_name, count=fetch_cnt, startIndex=ix*fetch_cnt)

# Parse the URL with parameters

wfs_request_url = Request('GET', url, params=params).prepare().url

print(wfs_request_url)

# df = gp.read_file(wfs_request_url)

try:

df_new = gp.read_file(wfs_request_url, format='GML')

except ValueError:

return

return df_new

def loop_layer(

layer_name, max_loops: int = 100, item_fetch_cnt: int = 1000) -> gp.GeoDataFrame:

"""Specify the parameters for fetching the data

max_loops: how many iterations per layer max

item_fetch_cnt: specificies amount of rows to return per iteration

(e.g. 10000 or 100); check capabilities in browser first

startIndex: specifies at which offset to start returning rows

"""

df = None

cnt = 0

for ix in range(100):

df_new = fetch_features(layer_name, item_fetch_cnt, ix)

if df_new is None:

print("Empty received, aborting..")

break

new_cnt = len(df_new)

if new_cnt == 0:

# empty result

# all rows fetched?

break

cnt += new_cnt

print (f"Round {ix}, retrieved {cnt} features", end="\r")

if df is None:

df = df_new

else:

df = pd.concat([df, df_new])

if new_cnt < item_fetch_cnt:

break

return df

for layer_name in layers:

# Fetch all features for all layers

print(f'Fetching layer {layer_name}')

df = loop_layer(layer_name=layer_name)

# sanitize layername

layer_name_fine = "".join(x for x in layer_name if x.isalnum())

df.to_file(f'2024-01-07_bfn_{layer_name_fine}.shp')

See a gist here: https://gist.github.com/Sieboldianus/7167d4c93aa073ac327bc03423d4b642