Google AI Studio Prompts: Backup and Restore

I developed two scripts to manage prompts in Google AI Studio. Before describing them, a note on data privacy: Google’s policy states they can access and use all content uploaded to AI Studio. For this reason, I only use it for data and content that is already public.

Why Use AI Studio and Why Backup?

I am not a fan of Google. But Google AI Studio provides a large context window of 1 million tokens. This enables workflows that serve a similar purpose to Retrieval Augmented Generation (RAG), except that the extensive context is supplied directly within the prompt instead of being retrieved piecemeal. In my experience, supplying the full context reduces the likelihood of hallucinations and makes the generation process more transparent. It has helped me a great deal with many laborious tasks, particularly summarizing content or compiling overviews of large amounts of input text.

Given this, it is a critical requirement to back up prompts together with their full context. A complete backup serves several purposes:

- Documentation: It preserves a record of how information was processed (prompts, answers, etc.).

- Reproducibility: The same processing can be applied later with newer models to compare results.

- Restoration: It allows for the recovery of entire processing pipelines.

The restore script’s primary goal is to support data minimization: prompts and their associated data can be deleted from AI Studio and Google Drive to reduce the online data footprint, and selectively restored from the local backup when needed.

Offline Viewing of Prompts

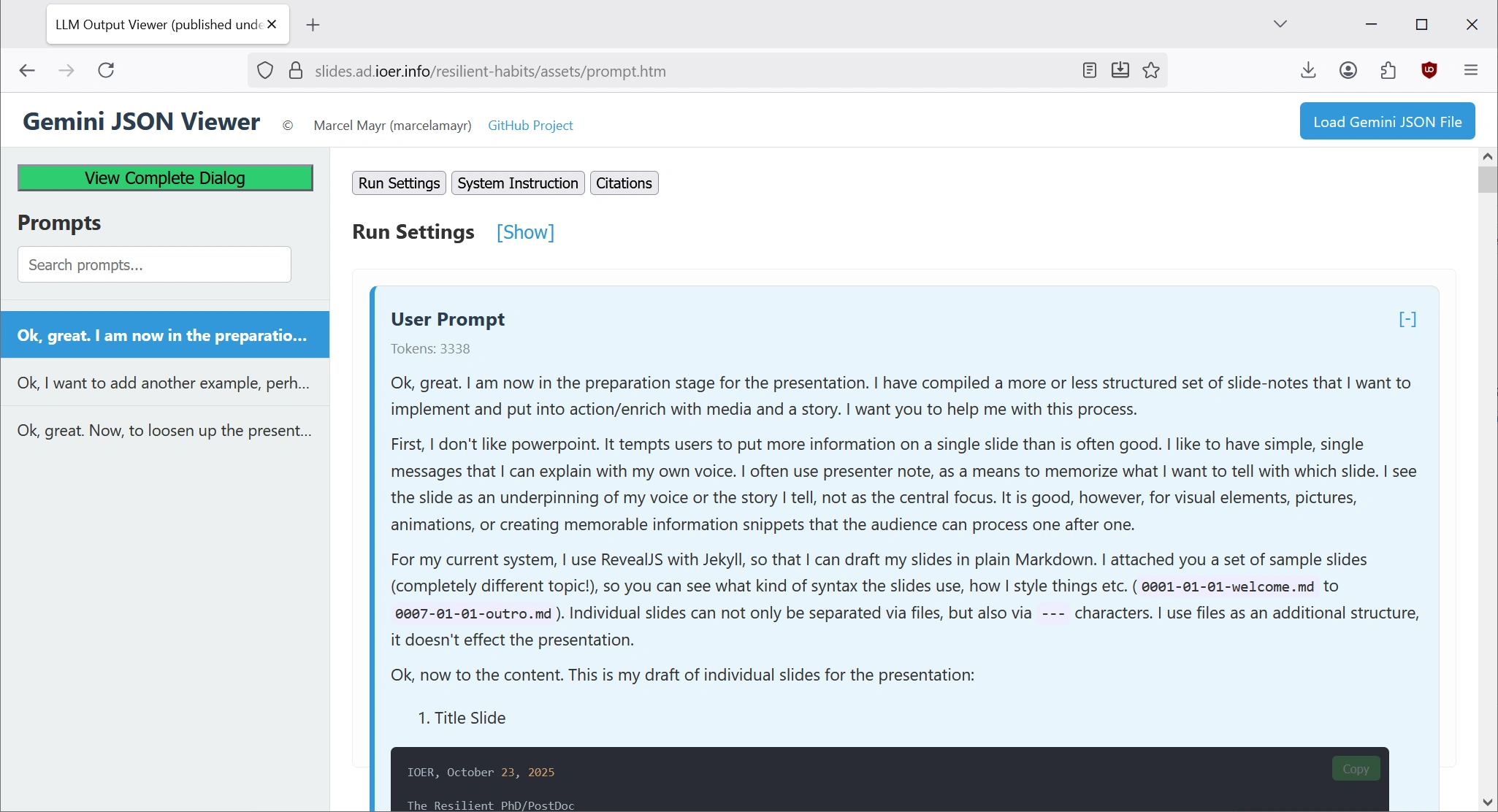

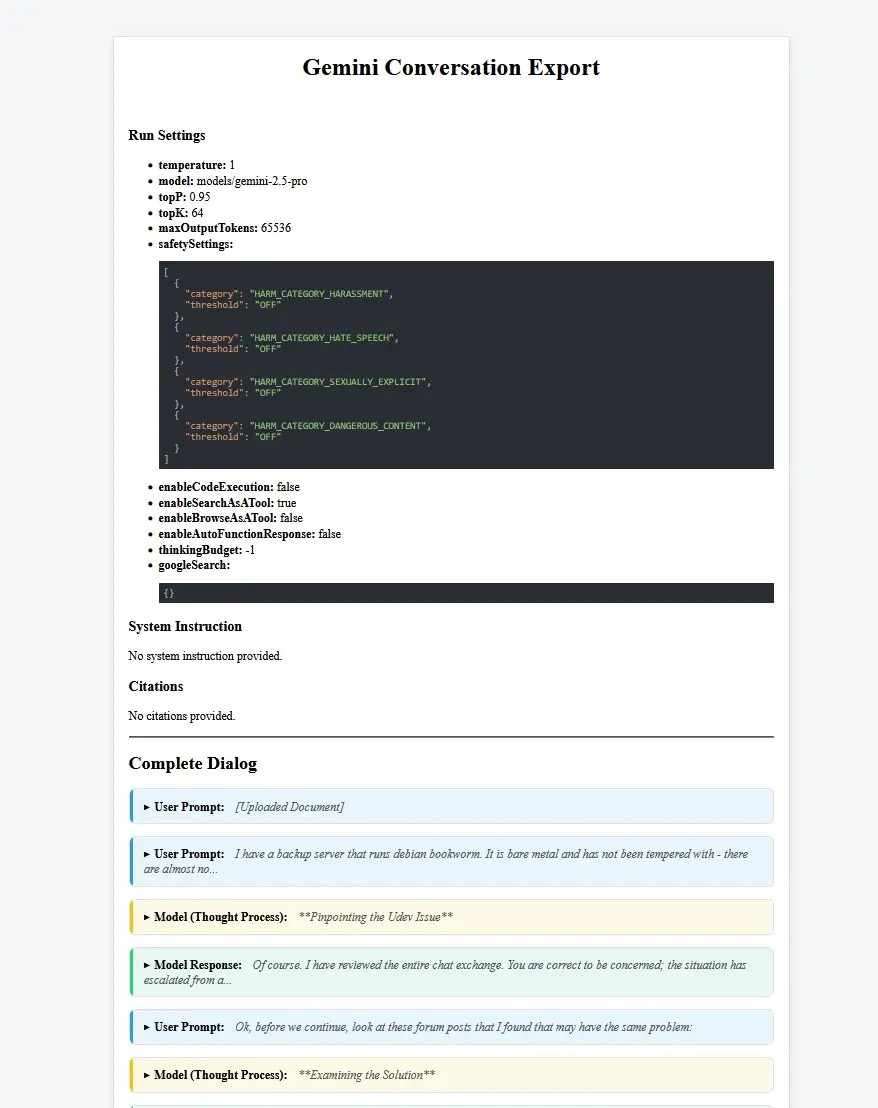

For offline analysis of exported Gemini JSON files, I use and have extended the excellent Gemini-json-Viewer originally created by Marcel Mayr. My version can be found here:

The viewer renders the conversation from an AI Studio JSON file into a clear, interactive HTML view. To support the goals of transparency and documentation that I outlined above, I added a crucial feature: the ability to export the entire conversation to a single, self-contained static HTML file.

This creates a permanent, shareable archive of a prompt’s full context and output. It’s perfect for web sharing or for including as a supplement in publications, ensuring the entire generation process is transparent and reproducible.

The example I used in my presentations was created with this export feature. You can see the live exported file here.

The Scripts ⚙️

The workflow is split into two scripts: one for organizing the backups downloaded from Google Drive, and one for restoring a specific prompt back to AI Studio.

Backup Script

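This script processes the local copy of the Google AI Studio folder synced from Google Drive. It sorts the AI Studio JSON prompt files into dated folders, each named after the file's creation date and a slug of the prompt's title (e.g., 2025-10-28_postdoc-survival-tame-information-flood), and copies the Drive documents linked in each prompt into the same folder. This makes the flat archive more human-readable. A manifest of MD5 hashes from the previous run lets the script skip prompts that have not changed.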
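To see how a prompt title becomes a folder name, the slug pipeline from organize_archive_win.sh can be wrapped in a small function and tried on its own. This is a sketch: `folder_name` is a hypothetical helper, the date is passed in as an argument instead of being read from the file, and it assumes GNU sed (for `-r`) and an `iconv` that supports `//TRANSLIT` (as glibc's does).

```bash
#!/bin/bash
# Hypothetical helper: builds "<date>_<slug>" with the same pipeline as
# organize_archive_win.sh. Assumes GNU sed (-r) and iconv with //TRANSLIT.
folder_name() {
  local date="$1" title="$2" slug
  slug=$(printf '%s\n' "$title" \
    | iconv -t ascii//TRANSLIT \
    | sed -r 's/[^a-zA-Z0-9]+/-/g' \
    | sed -r 's/^-+|-+$//g' \
    | tr '[:upper:]' '[:lower:]')
  printf '%s_%s\n' "$date" "$slug"
}

folder_name 2025-10-28 "Postdoc Survival: Tame Information Flood"
# prints: 2025-10-28_postdoc-survival-tame-information-flood
```

Every run of non-alphanumeric characters (spaces, colons, commas, dots) collapses into a single dash, and leading or trailing dashes are trimmed, so titles with punctuation still yield clean folder names.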

organize_archive_win.sh

```bash
#!/bin/bash
# Sorts the synced AI Studio prompt files into "<creation-date>_<slug>" folders
# and copies the Drive files each prompt links to.
#
# Design notes:
# - Caching: an MD5 manifest from the previous run lets the script skip
#   prompts whose JSON file is unchanged, so a typical run only processes
#   new or edited prompts (e.g. "61 prompts checked, 1 updated, 60 unchanged").
# - Stability: short sleeps in the loops prevent crashes (see STABILITY notes).
#   We are intentionally NOT using 'set -e' for stability.

# --- CONFIGURATION ---
SOURCE_DIR="/tmp/gdrive_sync/Google AI Studio"
ORGANIZED_DIR="/c/your/path/to/backup/folder/GoogleAIStudio_Organized"
GDRIVE_REMOTE="gdrive"
ID_MAP_FILE="/tmp/gdrive_id_map_latest.json"

# --- Caching & Temporary Files ---
MANIFEST_FILE="$ORGANIZED_DIR/.last_run_manifest.txt"
NEW_MANIFEST_FILE="/tmp/current_manifest.txt"
PROMPT_LIST_FILE="/tmp/prompt_list_to_process.txt"

# --- SCRIPT LOGIC ---
if ! command -v jq &> /dev/null; then
  echo "Error: 'jq' is not installed." >&2
  exit 1
fi

echo "🗂️ Starting organization of synced AI Studio files."
echo " Source: $SOURCE_DIR"
echo " Destination: $ORGANIZED_DIR"
echo ""

# Map of Drive file IDs to file names, used to resolve files linked in prompts
echo "🗺️ Generating fresh Drive ID map..."
if ! rclone lsjson "$GDRIVE_REMOTE":"Google AI Studio" > "$ID_MAP_FILE"; then
  echo "❌ Error: Failed to generate file map from rclone." >&2
  exit 1
fi
echo "✅ ID Map created successfully."
echo ""

echo "🚀 Processing files and updating organized archive..."
mkdir -p "$ORGANIZED_DIR"

# --- STAGE 1: Identify all prompts (non-binary files that parse as JSON) ---
echo " -> Identifying prompts to process (stability mode)..."
true > "$PROMPT_LIST_FILE"
for file_path in "$SOURCE_DIR"/*; do
  [ -f "$file_path" ] || continue
  # grep -I skips binary files; jq -e rejects anything that is not valid JSON
  if /bin/grep -q -I . "$file_path" && jq -e . "$file_path" >/dev/null 2>&1; then
    echo "$file_path" >> "$PROMPT_LIST_FILE"
  fi
  # STABILITY: This sleep is critical to prevent crashes during the initial scan.
  sleep 0.005
done
total_prompts=$(wc -l < "$PROMPT_LIST_FILE")
echo " -> Found $total_prompts prompts to check."

# --- STAGE 2: Process the prompts ---
true > "$NEW_MANIFEST_FILE"
processed_count=0
updated_count=0
skipped_count=0

while IFS= read -r file_path; do
  ((processed_count++))
  prompt_name=$(basename "$file_path")
  # Hash via stdin so md5sum never escapes unusual characters in the file name
  current_hash=$(md5sum < "$file_path" | awk '{print $1}')
  # Manifest line: "<hash> <full path>"; the full path is an unambiguous key
  echo "$current_hash $file_path" >> "$NEW_MANIFEST_FILE"

  stored_hash=""
  if [ -f "$MANIFEST_FILE" ]; then
    # The hash contains no spaces, so everything after the first space is the
    # exact path, even if the path itself contains (consecutive) spaces.
    stored_hash=$(P="$file_path" awk 'substr($0, index($0, " ") + 1) == ENVIRON["P"] { print $1; exit }' "$MANIFEST_FILE")
  fi

  if [ -n "$current_hash" ] && [ "$current_hash" == "$stored_hash" ]; then
    ((skipped_count++))
    printf "\r -> Checking prompts: %d/%d processed, %d skipped..." "$processed_count" "$total_prompts" "$skipped_count"
    sleep 0.005 # STABILITY
    continue
  fi

  ((updated_count++))
  echo
  echo "---"
  echo "Processing prompt: $prompt_name"
  echo " -> New or modified file detected. Processing..."

  # WSL1: read the NTFS creation date through PowerShell ('' escapes ' in PowerShell)
  win_path=$(wslpath -w "$file_path")
  escaped_win_path="${win_path//\'/\'\'}"
  creation_date=$(/c/Windows/System32/WindowsPowerShell/v1.0/powershell.exe -Command "(Get-ItemProperty -LiteralPath '$escaped_win_path').CreationTime.ToString('yyyy-MM-dd')" < /dev/null | tr -d '\r')

  # Slug: transliterate to ASCII, collapse non-alphanumerics to '-', trim, lowercase
  slug=$(echo "$prompt_name" | iconv -t ascii//TRANSLIT | sed -r 's/[^a-zA-Z0-9]+/-/g' | sed -r 's/^-+|-+$//g' | tr '[:upper:]' '[:lower:]')
  target_folder_path="${ORGANIZED_DIR}/${creation_date}_${slug}"
  mkdir -p "$target_folder_path"
  cp -u "$file_path" "$target_folder_path/"

  # Copy the Drive files linked in the prompt (documents and images, which the
  # restore script also expects), resolving each ID to a name via the ID map
  doc_ids=$(jq -r '.. | (.driveDocument?.id, .driveImage?.id) | select(. != null)' "$file_path")
  for doc_id in $doc_ids; do
    file_to_copy=$(jq -r --arg id "$doc_id" '.[] | select(.ID == $id) | .Name' "$ID_MAP_FILE")
    source_file_path="$SOURCE_DIR/$file_to_copy"
    if [ -n "$file_to_copy" ] && [ -f "$source_file_path" ]; then
      cp -u "$source_file_path" "$target_folder_path/"
    fi
    # STABILITY: This sleep is critical to prevent crashes in the nested loop
    sleep 0.01
  done
done < "$PROMPT_LIST_FILE"

# Final summary
echo
if [ "$updated_count" -eq 0 ] && [ "$processed_count" -gt 0 ]; then
  echo " -> All $processed_count checked prompts were up-to-date."
else
  echo " -> Summary: $processed_count prompts checked, $updated_count updated, $skipped_count unchanged."
fi

# Replace the old manifest and clean up temporary files
mv "$NEW_MANIFEST_FILE" "$MANIFEST_FILE"
rm -f "$ID_MAP_FILE" "$PROMPT_LIST_FILE"

echo ""
echo "---"
echo "✅ AI Studio archive is now up-to-date."
```
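The change detection boils down to a plain-text manifest with one `<md5> <path>` line per prompt. Because an MD5 hash never contains a space, everything after the first space is the path, even when the path itself contains spaces, as "Google AI Studio" does. The following sketch isolates that lookup and the skip decision; `lookup_hash` and `has_changed` are hypothetical names, and the demo works in a temporary directory rather than the real archive.

```bash
#!/bin/bash
# Hypothetical helpers illustrating manifest-based change detection.
# Manifest format (one line per prompt): "<md5> <full path>"

# Print the stored hash for an exact path, or nothing if the path is unknown.
lookup_hash() {
  local manifest="$1" path="$2"
  [ -f "$manifest" ] || return 0
  # ENVIRON avoids awk's escape processing of -v values (e.g. backslashes)
  P="$path" awk 'substr($0, index($0, " ") + 1) == ENVIRON["P"] { print $1; exit }' "$manifest"
}

# Succeed (return 0) if the file is new or changed since the manifest was written.
has_changed() {
  local manifest="$1" file="$2" current
  current=$(md5sum < "$file" | awk '{print $1}')
  [ "$current" != "$(lookup_hash "$manifest" "$file")" ]
}

# Demo in a scratch directory
demo_dir=$(mktemp -d)
prompt="$demo_dir/My  Prompt.json"   # two consecutive spaces on purpose
printf '{"v": 1}\n' > "$prompt"
printf '%s %s\n' "$(md5sum < "$prompt" | awk '{print $1}')" "$prompt" > "$demo_dir/manifest.txt"
has_changed "$demo_dir/manifest.txt" "$prompt" && echo changed || echo unchanged   # prints: unchanged
printf '{"v": 2}\n' > "$prompt"
has_changed "$demo_dir/manifest.txt" "$prompt" && echo changed || echo unchanged   # prints: changed
rm -rf "$demo_dir"
```

Keying on the full path rather than the bare file name keeps the lookup unambiguous; unknown paths simply yield an empty stored hash and are therefore treated as changed.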

Critical: Because I (still) work on WSL1 under Windows, I had to use the following line in the script to read file creation dates from NTFS:

```bash
creation_date=$(/c/Windows/System32/WindowsPowerShell/v1.0/powershell.exe -Command "(Get-ItemProperty -LiteralPath '$escaped_win_path').CreationTime.ToString('yyyy-MM-dd')" < /dev/null | tr -d '\r')
```

Under native Linux, this line would be simpler.
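A possible native-Linux replacement, sketched as a hypothetical `get_creation_date` helper: it asks GNU `stat` for the file's birth time (`%w`) and falls back to the modification date when the kernel or file system does not report one, in which case `stat` prints `-`. This assumes GNU coreutils; whether a birth time is available depends on the coreutils version, the kernel, and the file system. In the script, the three WSL lines (`win_path`, `escaped_win_path`, `creation_date`) would then shrink to `creation_date=$(get_creation_date "$file_path")`.

```bash
#!/bin/bash
# Hypothetical helper: print a file's creation (birth) date as YYYY-MM-DD.
# Assumes GNU coreutils. Falls back to the modification date when no birth
# time is reported (GNU stat prints "-" for %w in that case).
get_creation_date() {
  local file="$1" birth
  birth=$(stat -c '%w' -- "$file" 2>/dev/null)
  if [ -n "$birth" ] && [ "$birth" != "-" ]; then
    # %w looks like "2025-09-12 10:31:07.123456789 +0200"; keep the date part
    printf '%s\n' "${birth%% *}"
  else
    date -r "$file" +%Y-%m-%d
  fi
}

# Example: get_creation_date "/tmp/gdrive_sync/Google AI Studio/My Prompt"
```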

Restore Script

This script automates restoring a backed-up prompt (including any associated files such as documents or images) to AI Studio. It uploads the necessary files, maps the old file IDs to the new ones, overwrites a temporary “bait” prompt with the restored content, and renames it to the name of the backup folder. The bait prompt step is necessary because there is currently no way to proactively link content uploaded to Google Drive to the AI Studio prompt history.
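At its core, the re-linking is a search-and-replace of Drive IDs inside a copy of the prompt JSON: the script writes one `s/OLD/NEW/g` line per content file into a sed script and applies it with `sed -i -f`. The sketch below isolates that step in a hypothetical `relink_ids` function that takes `OLD=NEW` pairs directly; in restore-hijack.sh the pairs come from the prompt JSON and `rclone lsjson`. It assumes GNU sed (for `-i`) and IDs made only of letters, digits, `-` and `_`, so that no sed escaping is needed.

```bash
#!/bin/bash
# Hypothetical helper: rewrite old Drive IDs to new ones in a JSON payload.
# Usage: relink_ids payload.json OLD1=NEW1 [OLD2=NEW2 ...]
relink_ids() {
  local payload="$1"; shift
  local script pair old new
  script=$(mktemp)
  for pair in "$@"; do
    old=${pair%%=*}
    new=${pair#*=}
    # Assumes IDs contain only [A-Za-z0-9_-], so no sed escaping is needed
    printf 's/%s/%s/g\n' "$old" "$new" >> "$script"
  done
  sed -i -f "$script" "$payload"   # GNU sed: edit in place
  rm -f "$script"
}

payload=$(mktemp)
printf '{"driveDocument":{"id":"1fA9k-7oPqRsT6uVwXyZ1aB2cD3eF4gH5"}}\n' > "$payload"
relink_ids "$payload" 1fA9k-7oPqRsT6uVwXyZ1aB2cD3eF4gH5=1bCrvs-JesMP1SCfXTKzGwJP7TtklvQMp
cat "$payload"   # prints: {"driveDocument":{"id":"1bCrvs-JesMP1SCfXTKzGwJP7TtklvQMp"}}
rm -f "$payload"
```

Collecting all substitutions in one sed script means the payload is rewritten in a single pass, no matter how many files the prompt links to.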

restore-hijack.sh

```bash
#!/bin/bash
# The definitive, human-guided "hijack" restore script.
# It automates the technical steps and pauses for the necessary manual interaction.
set -e

# --- CONFIGURATION (defaults, can be overridden in .env) ---
GDRIVE_REMOTE="gdrive"
GDRIVE_TARGET_DIR="Google AI Studio"
BACKUP_BASE_PATH="/c/your/path/to/backup/folder/GoogleAIStudio_Organized"

# --- LOAD .ENV FILE ---
# Get the directory where the script is located, regardless of where it's called from
SCRIPT_DIR=$(cd -- "$(dirname -- "${BASH_SOURCE[0]}")" &>/dev/null && pwd)
ENV_FILE="$SCRIPT_DIR/.env"
if [ -f "$ENV_FILE" ]; then
  echo " -> Loading configuration from $ENV_FILE"
  # Source the file with auto-export on. Unlike 'export "$(... | xargs)"', this
  # keeps quoted values with spaces (e.g. "Google AI Studio") intact.
  set -a
  # shellcheck source=/dev/null
  . "$ENV_FILE"
  set +a
fi

# --- SCRIPT LOGIC ---
if [ -z "$1" ]; then
  echo "❌ Error: You must provide the NAME of the prompt folder to restore." >&2
  echo " Usage: ./restore-hijack.sh '2025-09-12_ai-fallacies-over-promising-under-delivering'"
  exit 1
fi

# Combine base path and folder name; ${BACKUP_BASE_PATH%/} removes a trailing
# slash from the base path, if it exists, to prevent double slashes.
LOCAL_PROMPT_DIR="${BACKUP_BASE_PATH%/}/$1"
if [ ! -d "$LOCAL_PROMPT_DIR" ]; then
  echo "❌ Error: Directory not found: '$LOCAL_PROMPT_DIR'" >&2
  exit 1
fi

# --- STAGE 1: Find Prompt and Extract Context ---
# The prompt is the first file in the folder that parses as JSON.
ORIGINAL_PROMPT_FILE=""
for file in "$LOCAL_PROMPT_DIR"/*; do
  if [ -f "$file" ] && jq -e . "$file" >/dev/null 2>&1; then
    ORIGINAL_PROMPT_FILE=$file
    break
  fi
done
if [ -z "$ORIGINAL_PROMPT_FILE" ]; then
  echo "❌ Error: Could not find a valid JSON prompt file in '$LOCAL_PROMPT_DIR'." >&2
  exit 1
fi

PROMPT_FILENAME=$(basename "$ORIGINAL_PROMPT_FILE")
# First three lines of user text, shown verbatim as a naming hint
PROMPT_CONTEXT=$(jq -r '.chunkedPrompt.chunks[] | select(.role == "user" and .text) | .text' "$ORIGINAL_PROMPT_FILE" | head -n 3)
if [ -z "$PROMPT_CONTEXT" ]; then
  PROMPT_CONTEXT="<No text found, using filename as context>"
fi

# --- STAGE 2: Guide the User to Create the "Bait" Prompt ---
echo "------------------------------------------------------------"
echo "STEP 1: CREATE A 'BAIT' PROMPT IN AI STUDIO"
echo "------------------------------------------------------------"
echo "I will now pause. Please perform the following steps:"
echo "1. Go to Google AI Studio and create a NEW, BLANK prompt."
echo "2. To help AI Studio create a similar name, copy and paste the following"
echo " text into the first user input box:"
echo " -------------------- CONTEXT --------------------"
echo " Prompt Name: $PROMPT_FILENAME"
echo " Initial Text: $PROMPT_CONTEXT"
echo " -----------------------------------------------"
echo "3. Click 'Run' and then SAVE the prompt."
read -r -p "Press ENTER here when you have saved the new prompt in AI Studio..."
echo "------------------------------------------------------------"

# --- STAGE 3: Find the "Bait" Prompt (the most recently modified file) ---
echo " -> Searching for the 'bait' prompt you just created..."
# '// empty' turns a missing result into an empty string instead of "null"
BAIT_PROMPT_NAME=$(rclone lsjson "$GDRIVE_REMOTE:$GDRIVE_TARGET_DIR" | jq -r '[.[] | select(.IsDir == false)] | sort_by(.ModTime) | .[-1].Name // empty')
if [ -z "$BAIT_PROMPT_NAME" ]; then
  echo "❌ Error: Could not find any prompts on Google Drive." >&2
  exit 1
fi
echo " ✅ Found bait prompt: '$BAIT_PROMPT_NAME' (the newest file)."

# --- STAGE 4: Prepare and Hijack ---
echo " -> Preparing the re-linked JSON payload from backup..."
PAYLOAD_FILE="/tmp/restored_payload.json"
cp "$ORIGINAL_PROMPT_FILE" "$PAYLOAD_FILE"
ID_MAP_REPLACE_FILE="/tmp/id_replace_map.txt"
true > "$ID_MAP_REPLACE_FILE"

# Content files: everything in the folder except the prompt JSON, sorted by name
mapfile -d '' CONTENT_FILES < <(find "$LOCAL_PROMPT_DIR" -maxdepth 1 -type f -not -name "$PROMPT_FILENAME" -print0 | sort -z)

if [ "${#CONTENT_FILES[@]}" -eq 0 ]; then
  echo " - No separate content files found to upload."
else
  for file_path in "${CONTENT_FILES[@]}"; do
    filename=$(basename "$file_path")
    echo " - Uploading content: '$filename'..."
    rclone copyto "$file_path" "$GDRIVE_REMOTE:$GDRIVE_TARGET_DIR/$filename"
  done
  echo " - Pausing for 10 seconds to allow Google Drive to index new files..."
  sleep 10

  echo " - Building ID map..."
  REMOTE_FILES_JSON=$(rclone lsjson "$GDRIVE_REMOTE:$GDRIVE_TARGET_DIR")
  # Old Drive IDs, in the order in which they appear in the prompt
  mapfile -t OLD_IDS < <(jq -r '.chunkedPrompt.chunks[] | (.driveDocument?.id, .driveImage?.id) | select(. != null)' "$ORIGINAL_PROMPT_FILE")
  # NOTE: old IDs are paired with content files by position, which assumes the
  # name-sorted files appear in the same order in the prompt. Check the output.
  if [ "${#OLD_IDS[@]}" -ne "${#CONTENT_FILES[@]}" ]; then
    echo " ⚠️ Warning: ${#OLD_IDS[@]} linked IDs but ${#CONTENT_FILES[@]} content files; the mapping may be wrong."
  fi
  for i in "${!CONTENT_FILES[@]}"; do
    local_filename=$(basename "${CONTENT_FILES[$i]}")
    old_id=${OLD_IDS[$i]}
    # If several remote files share this name, take the most recently modified one
    new_id=$(echo "$REMOTE_FILES_JSON" | jq -r --arg name "$local_filename" '[.[] | select(.Name == $name)] | sort_by(.ModTime) | .[-1].ID // empty')
    if [ -n "$new_id" ] && [ -n "$old_id" ]; then
      echo "s/$old_id/$new_id/g" >> "$ID_MAP_REPLACE_FILE"
      echo " - Mapped '$local_filename': $old_id -> $new_id"
    else
      echo " ⚠️ Warning: Could not find a new ID for content file '$local_filename'."
    fi
  done
  sed -i -f "$ID_MAP_REPLACE_FILE" "$PAYLOAD_FILE"
fi
echo " ✅ Payload ready."

echo " -> Overwriting the content of the 'bait' prompt..."
rclone copyto "$PAYLOAD_FILE" "$GDRIVE_REMOTE:$GDRIVE_TARGET_DIR/$BAIT_PROMPT_NAME"
echo " ✅ Hijack complete."

# --- STAGE 5: AUTOMATICALLY RENAME THE PROMPT ---
echo " -> Renaming the prompt on Google Drive..."
# The desired final name is the name of the local folder
FINAL_PROMPT_NAME=$(basename "$LOCAL_PROMPT_DIR")
# Use 'moveto' to perform the rename operation
rclone moveto "$GDRIVE_REMOTE:$GDRIVE_TARGET_DIR/$BAIT_PROMPT_NAME" "$GDRIVE_REMOTE:$GDRIVE_TARGET_DIR/$FINAL_PROMPT_NAME"
echo " ✅ Renamed to '$FINAL_PROMPT_NAME'."

# --- CLEANUP ---
rm "$PAYLOAD_FILE" "$ID_MAP_REPLACE_FILE"
echo "------------------------------------------------------------"
echo "✅ Restore process complete."
echo
echo "FINAL STEP:"
echo "1. Go to https://aistudio.google.com/app/prompts"
echo "2. Find and open the prompt named '$FINAL_PROMPT_NAME'."
echo "3. It should now contain the fully restored content."
```

Setup and Usage

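The scripts require rclone and jq. rclone must be configured with a working Google Drive remote (named gdrive by default), which means authorizing rclone to access your Google account; rclone recommends creating your own Google API client ID for this.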

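The scripts are path-agnostic and can be placed anywhere, as long as daily.sh and organize_archive_win.sh share a folder. The restore script reads its configuration from a .env file located in the same directory; the backup script uses the variables defined at its top (SOURCE_DIR, ORGANIZED_DIR, GDRIVE_REMOTE), so adjust those directly.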

- Create a .env file in the same folder as the scripts. You can copy the example below.

- Adjust the variables in the .env file to match your setup (GDRIVE_REMOTE, GDRIVE_TARGET_DIR, and BACKUP_BASE_PATH).

```bash
# .env - Configuration for the restore script (restore-hijack.sh)
# These values will override the defaults in the script.
GDRIVE_REMOTE="gdrive"
GDRIVE_TARGET_DIR="Google AI Studio"
# Should point to the same folder as ORGANIZED_DIR in organize_archive_win.sh
BACKUP_BASE_PATH="/path/to/your/GoogleAIStudio_Organized/"
```
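A .env file in this format can be loaded by sourcing it with bash's auto-export option (`set -a`), which keeps quoted values containing spaces, such as "Google AI Studio", intact. A minimal sketch with a hypothetical `load_env` helper; since sourcing runs the file as shell code, only use it with a .env file you control.

```bash
#!/bin/bash
# Hypothetical helper: load KEY="value" lines from a .env file and export them.
# Values from the file override defaults that were set before the call.
load_env() {
  local env_file="$1"
  [ -f "$env_file" ] || return 0
  set -a            # auto-export every variable assigned while sourcing
  # shellcheck source=/dev/null
  . "$env_file"     # comments and quoting follow normal shell rules
  set +a
}

# Usage inside a script:
#   GDRIVE_TARGET_DIR="Google AI Studio"   # default
#   load_env "$SCRIPT_DIR/.env"            # .env values win
```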

- Run the Backup script

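I call the backup script from my daily.sh, a script that I run every day. It syncs the Google AI Studio folder from Google Drive to /tmp/gdrive_sync, fixes file names that NTFS does not accept, and then asks whether to run the organization step: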

```bash
#!/usr/bin/env bash
# Exit on error
set -e

# Get the directory where daily.sh is located to reliably find organize_archive_win.sh
SCRIPT_DIR=$( cd -- "$( dirname -- "${BASH_SOURCE[0]}" )" &> /dev/null && pwd )

# Define the target sync folder
SYNC_DIR="/tmp/gdrive_sync"
mkdir -p "$SYNC_DIR"

# === RCLONE SYNC STAGE ===
# Change to the target directory
cd "$SYNC_DIR" || exit

# --- Google AI Studio Sync ---
echo "---"
echo "🔄 Preparing to sync Google AI Studio..."

# STEP 1: Deduplicate the remote source to prevent local file conflicts.
# In 'rename' mode, rclone first removes duplicates with identical content,
# then renames the remaining same-name files so that every name is unique.
echo "⚠️ Deduplicating remote source (renames older duplicate files)..."
rclone dedupe --dedupe-mode rename gdrive:"Google AI Studio"

# STEP 2: Sync the now-clean source.
rclone sync --auto-confirm --create-empty-src-dirs gdrive:"Google AI Studio" "./Google AI Studio" \
  --bwlimit 4325k -P --log-level ERROR

# === SANITIZE FILES STAGE ===
# Necessary because some AI Studio JSON file names end with a dot, which
# Windows/NTFS does not support in file or folder names.
find "$SYNC_DIR" -type f -name '*.' | while IFS= read -r file; do
  # Remove the trailing dot
  newname="${file%.}"
  if [[ -e "$newname" ]]; then
    echo "Deleting duplicate with dot: '$file'"
    rm -- "$file"
  else
    echo "Renaming: '$file' -> '$newname'"
    mv -- "$file" "$newname"
  fi
done

echo "✅ Google Drive sync completed."
echo "---"

# === (OPTIONAL) ORGANIZE AI STUDIO ARCHIVE STAGE ===
read -p "Do you want to run the full organization for the AI Studio archive? (y/n) " -n 1 -r
echo # Move to a new line
if [[ "$REPLY" =~ ^[Yy]$ ]]; then
  "$SCRIPT_DIR/organize_archive_win.sh"
else
  echo "Skipping organization step."
fi

# Final success message
echo "✅ Daily script finished."
```
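The sanitize stage can be tried on a scratch directory before it touches the real sync folder. The sketch below wraps the same logic in a hypothetical `strip_trailing_dots` function; `${file%.}` removes exactly one trailing dot, and `find -print0` combined with `read -d ''` keeps file names with unusual characters (leading spaces, backslashes) intact.

```bash
#!/bin/bash
# Hypothetical helper: remove a trailing dot from every file name below a
# directory; if the dot-less name already exists, delete the dotted copy.
strip_trailing_dots() {
  local dir="$1" file newname
  while IFS= read -r -d '' file; do
    newname="${file%.}"
    if [[ -e "$newname" ]]; then
      echo "Deleting duplicate with dot: '$file'"
      rm -- "$file"
    else
      echo "Renaming: '$file' -> '$newname'"
      mv -- "$file" "$newname"
    fi
  done < <(find "$dir" -type f -name '*.' -print0)
}

# Example: strip_trailing_dots /tmp/gdrive_sync
```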

Note the critical line at the start of the sync stage:

```bash
rclone dedupe --dedupe-mode rename gdrive:"Google AI Studio"
```

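Google Drive allows several files with the same name in one folder, because it identifies files by their ID, not their name. Locally, however, files with the same name are not allowed. In rename mode, rclone dedupe first deletes duplicates with identical content and then renames the remaining same-name files on Google Drive so that every name is unique. The renamed prompts keep working in AI Studio because their file IDs remain the same. Note that identical duplicates are deleted on Google Drive, not renamed.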

Here’s a sample log:

```text
🔄 Preparing to sync Google AI Studio...
⚠️ Deduplicating remote source (renames older duplicate files)...
Transferred: 457.091 KiB / 457.091 KiB, 100%, 256.001 KiB/s, ETA 0s
Checks: 29 / 29, 100%
Deleted: 2 (files), 0 (dirs), 457.091 KiB (freed)
Transferred: 2 / 2, 100%
Elapsed time: 2.4s
✅ Google Drive sync completed.
---
Do you want to run the full organization for the AI Studio archive? (y/n) y
🗂️ Starting organization of synced AI Studio files.
Source: /tmp/gdrive_sync/Google AI Studio
Destination: /path/to/your/GoogleAIStudio_Organized/
🗺️ Generating fresh Drive ID map...
✅ ID Map created successfully.
🚀 Processing files and updating organized archive...
-> Identifying prompts to process (stability mode)...
-> Found 7 prompts to check.
-> Checking prompts: 7/7 processed, 7 skipped...
-> All 7 checked prompts were up-to-date.
---
✅ AI Studio archive is now up-to-date.
✅ Daily script finished.
```

- To restore a prompt, run the restore script from your terminal and pass the name of the backup folder you want to restore to AI Studio:

```bash
./restore-hijack.sh '2025-09-12_ai-fallacies-over-promising-under-delivering'
```

The script will then guide you through the interactive steps required.
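To look up the exact folder name to pass, a small helper that lists the backup folders, newest first and optionally filtered by a keyword, can save some typing. A sketch, assuming GNU find (for `-printf`) and folder names that start with the YYYY-MM-DD date produced by the backup script; `list_backups` is a hypothetical name.

```bash
#!/bin/bash
# Hypothetical helper: list restorable backup folders, newest first,
# optionally filtered by a case-insensitive keyword. Assumes GNU find.
list_backups() {
  local base="$1" keyword="${2:-}"
  # Folder names start with YYYY-MM-DD, so a reverse sort lists the newest first
  find "$base" -mindepth 1 -maxdepth 1 -type d -iname "*${keyword}*" -printf '%f\n' | sort -r
}

# Example (BACKUP_BASE_PATH as configured in .env):
#   list_backups "$BACKUP_BASE_PATH" postdoc
```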

Here’s an example log:

```text
./restore-hijack.sh "2025-10-28_postdoc-survival-tame-information-flood"
-> Loading configuration from .env
------------------------------------------------------------
STEP 1: CREATE A 'BAIT' PROMPT IN AI STUDIO
------------------------------------------------------------
I will now pause. Please perform the following steps:
1. Go to Google AI Studio and create a NEW, BLANK prompt.
2. To help AI Studio create a similar name, copy and paste the following
text into the first user input box:
-------------------- CONTEXT --------------------
Prompt Name: Postdoc Survival: Tame Information Flood
Initial Text: I would like to offer a meeting/workshop/learning session in our scientific institution for postdocs and phd to cope with the daily information flood: I am a Postdoc in my 8th year and survived so far, also (I think) because I have a number of daily routines and best practices that help me survive:
-----------------------------------------------
3. Click 'Run' and then SAVE the prompt.
Press ENTER here when you have saved the new prompt in AI Studio...
------------------------------------------------------------
-> Searching for the 'bait' prompt you just created...
✅ Found bait prompt: 'Taming The Information Flood' (the newest file).
-> Preparing the re-linked JSON payload from backup...
- Uploading content: '0001-01-01-welcome.md'...
- Uploading content: '0002-01-01-intro.md'...
- Uploading content: '0003-01-01-why.md'...
- Uploading content: '0004-01-01-retrieval.md'...
- Uploading content: '0005-01-01-visualization.md'...
- Uploading content: '0006-01-01-chore.md'...
- Uploading content: '0007-01-01-outro.md'...
- Uploading content: 'cv_alexanderdunkel.pdf'...
- Pausing for 10 seconds to allow Google Drive to index new files...
- Building ID map...
- Mapped '0001-01-01-welcome.md': 1fA9k-7oPqRsT6uVwXyZ1aB2cD3eF4gH5 -> 1bCrvs-JesMP1SCfXTKzGwJP7TtklvQMp
- Mapped '0002-01-01-intro.md': 1-muVPS0sb3UaK2U0_IgW9GB12yJ_n34NH -> 1q8KPakfM4L1I0rvYbvWzdHkgmmzIosO_
- Mapped '0003-01-01-why.md': 1jQwUQ4DnhS9W0KuXSFoPfA5a-JQEe9zW -> 1BiK369ULEwHcPZ9K-i8pxyVU7A4xi5Nl
- Mapped '0004-01-01-retrieval.md': 1gH5iJ6kL7mN8oP9qR1sT2uV3wX4yZ5a -> 1sgmnRdBaQ5bTWfOhoq1kQlcKgSasKj78
- Mapped '0005-01-01-visualization.md': 1kL7mN8oP9qR1sT2uV3wX4yZ5aB6cD7e -> 1jk2FUirydr3sjFtMGg0AWn4C-bgRzUyb
- Mapped '0006-01-01-chore.md': 1hb_Z6w2TEH_SqF4IaDmFZ9ruBIC7ZQK- -> 1rS9tUvWxYzAbCdEfGhIjKlMnOpQrStU
- Mapped '0007-01-01-outro.md': 1YpYEkhOjL0v7k4Q7b5KMD50eaO0FWx7K -> 1JHJy1IxWdTEInOWk6GiuhfAZCDEWx-Cg
- Mapped 'cv_alexanderdunkel.pdf': 1MuH3ssQ72wC9e7zyB_19KZITvyJUBfLt -> 1dEsKxr71Z7PaSIiLaYimmjrbcQ0QHc0z
✅ Payload ready.
-> Overwriting the content of the 'bait' prompt...
✅ Hijack complete.
-> Renaming the prompt on Google Drive...
✅ Renamed to '2025-10-28_postdoc-survival-tame-information-flood'.
------------------------------------------------------------
✅ Restore process complete.
FINAL STEP:
1. Go to https://aistudio.google.com/app/prompts
2. Find and open the prompt named '2025-10-28_postdoc-survival-tame-information-flood'.
3. It should now contain the fully restored content.
```

After a restore, it may be necessary to scroll through the full prompt history in AI Studio so that the token count of each linked context document is re-initialized.